The use of data is essential to the intelligence process for better decision-making. Roy Lindelauf, coordinator of the Defence Data Science Centre, makes his case and shows what is already possible in the academic world.

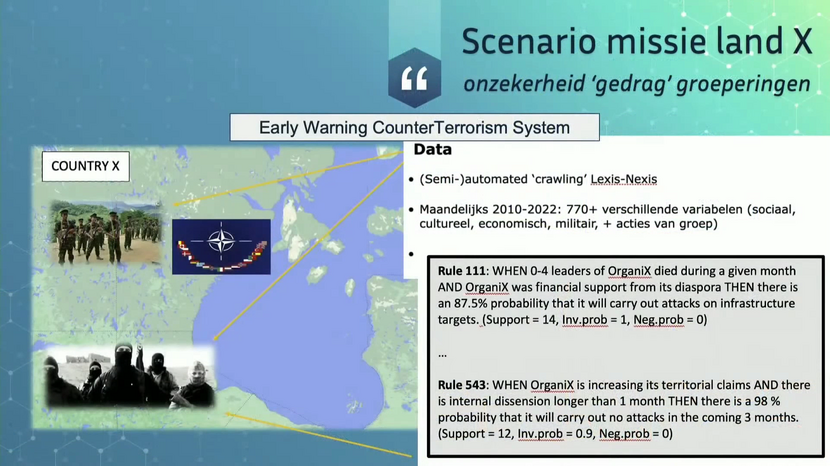

Imagine country ‘X’, where all kinds of things are happening. Numerous terrorist groups are carrying out attacks and destabilising the area. Insights have been gained into the country, and there is an ongoing NATO mission there. But how do you get a better picture of these groups? In this fictitious scenario, a system exists in which 770 variables have been gathered over a ten-year period, covering social, political and economic information, for example. These large quantities of data are analysed using an algorithm, yielding a number of ‘rules’. These ‘rules’ say something about the chance of a specific group carrying out an attack on a strategically located port in country X, for instance.

Internal divisions

One of the rules states that if between 0 and 4 leaders of this group have been killed, and if financial support is being received from other countries, the chance of an attack is very high. Another rule states that if there are internal divisions, the chance that an attack will occur within three months is low. These rules are presented to a ‘domain expert’, who devises a number of policy options. In response to the first rule, it is decided to employ ‘predictive patrolling’. In addition, a ‘deep fake’ video is made in which leaders of the group make statements designed to create divisions.
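To make the idea of such ‘rules’ concrete, the two examples above can be written down as simple if-then checks. This is only an illustrative sketch: the names `GroupState` and `matching_rules` are hypothetical, the outcomes are kept as the qualitative labels used in the scenario, and nothing here reflects how the actual algorithm derives or represents its rules.

```python
from dataclasses import dataclass


@dataclass
class GroupState:
    """Hypothetical snapshot of a few of the many variables tracked per group."""
    leaders_killed: int
    foreign_funding: bool
    internal_divisions: bool


def matching_rules(state: GroupState) -> list[str]:
    """Return the qualitative findings of every rule that applies to this state."""
    findings = []
    # Rule 1: 0 to 4 leaders killed AND financial support from other countries.
    if 0 <= state.leaders_killed <= 4 and state.foreign_funding:
        findings.append("chance of an attack: very high")
    # Rule 2: internal divisions within the group.
    if state.internal_divisions:
        findings.append("chance of an attack within three months: low")
    return findings


# Illustrative input only; these values are invented for the example.
funded_group = GroupState(leaders_killed=2, foreign_funding=True, internal_divisions=False)
print(matching_rules(funded_group))
```

The sketch deliberately returns every rule that fires rather than a single verdict. Weighing findings that point in different directions is left to the ‘domain expert’, as in the scenario.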

Preventing attacks

In the meantime, other algorithms are running in the background, for example to screen maritime traffic. This screening is based on publicly available data, and reveals that two ships en route to the strategically located port in country X are exhibiting suspicious behaviour. A real-time analysis is then made of the people and companies active in the port. This concerns a network of 70,000 people and businesses, many of them unknown. With the assistance of machine learning, a ‘suspicious score’ is generated, producing a list of a hundred potential suspects. Based on this, a special forces team makes a number of arrests and finds explosives. A large-scale attack is prevented.

Ethical principles

According to Roy Lindelauf, this scenario is closer than we think. ‘All the models already exist in the academic world, but it may take some time before the intelligence services can work with them. However, we are grappling with the question of whether you can simply develop and employ all these capabilities without any caveats. We are seeing that a specialist field is now developing in which ethical principles, such as privacy and transparency, are included in algorithms. These algorithms make it possible to dispel the fog using data-driven intelligence, while at the same time adhering to certain standards and values’.